News gathered 2024-12-21

(date: 2024-12-21 07:11:20)

Disney Princesses Are at Risk of Rabies and Fatal Maulings

date: 2024-12-21, from: 404 Media Group

This week, the creature from the Pangean lagoon, casket shopping for Disney princesses, a horror show in ancient Somerset, and “Martifacts.”

https://www.404media.co/disney-princesses-are-at-risk-of-rabies-and-fatal-maulings-3/

Christmas Classics

date: 2024-12-21, from: Status-Q blog

The oral tradition has long been an important part of preserving human culture, and it is perhaps especially at this time of year that we’re conscious of works of music and literature that have been handed down through the ages. While I was showering this morning, for example, I found myself singing a cheerful seasonal […]

https://statusq.org/archives/2024/12/21/12311/

@Dave Winer’s linkblog (date: 2024-12-21, from: Dave Winer’s linkblog)

Major Tesla investor criticizes Musk's embrace of far-right politics.

https://m.youtube.com/watch?v=SniddTMVYvE

The Thrill Was Never There

date: 2024-12-21, from: Tedium site

A famous punk-music personality reveals he was in it for the money—a revelation that has upset fans. But to be fair, it was the algorithm that pushed him in that direction.

https://feed.tedium.co/link/15204/16925582/punk-rock-mba-youtube-quitting

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

Amazing that the tech industry hasn't tried to retrieve its reputation from the ones who are repping us in DC nowadays. Software doesn't have to treat its users like nobodies.

http://scripting.com/2024/12/20.html#a230615

OpenAI o3 breakthrough high score on ARC-AGI-PUB

date: 2024-12-20, updated: 2024-12-20, from: Simon Willison’s Weblog

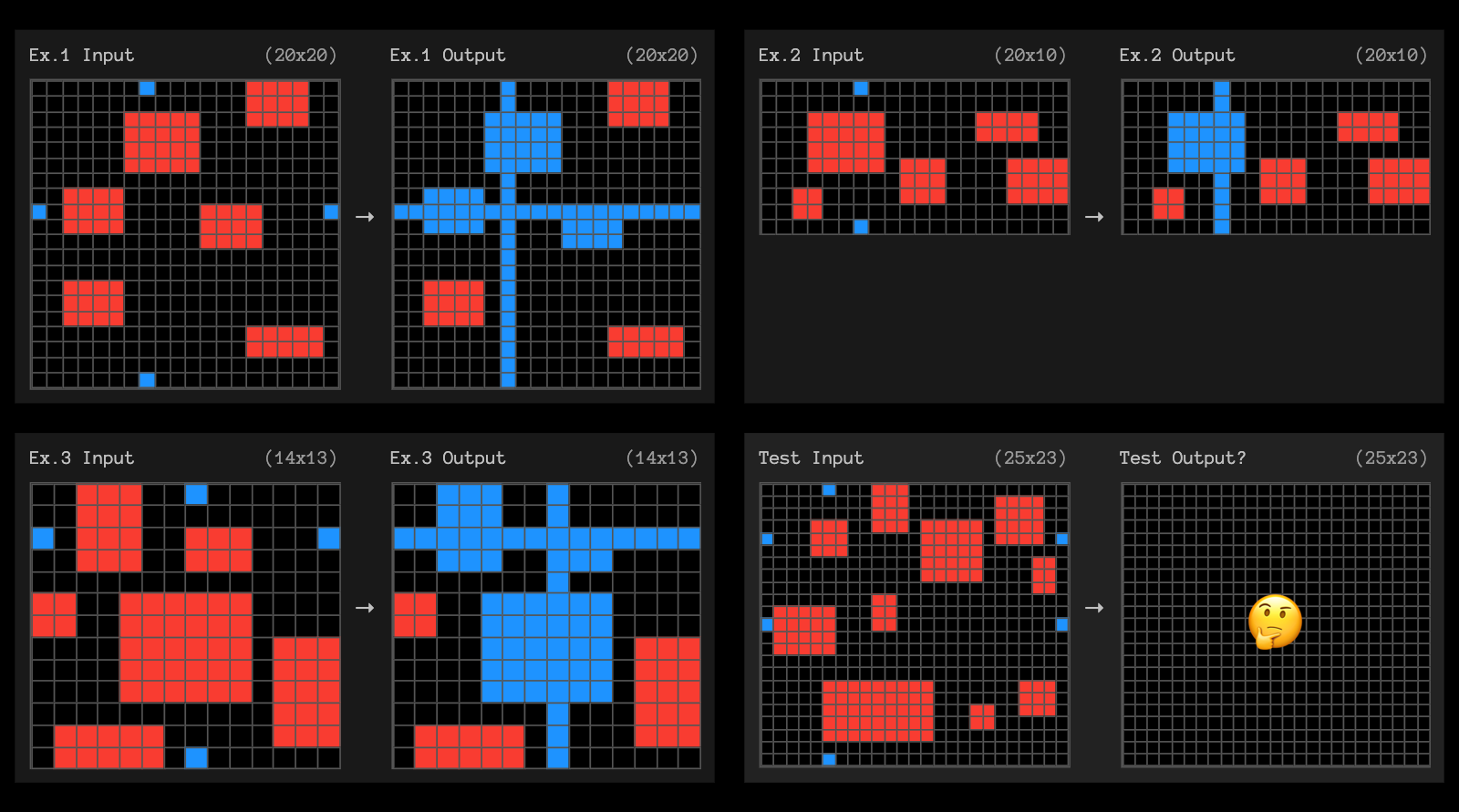

François Chollet is the co-founder of the ARC Prize and had advanced access to today’s o3 results. His article here is the most insightful coverage I’ve seen of o3, going beyond just the benchmark results to talk about what this all means for the field in general.

One fascinating detail: it cost $6,677 to run o3 in “high efficiency” mode against the 400 public ARC-AGI puzzles for a score of 82.8%, and an undisclosed amount of money to run the “low efficiency” mode model to score 91.5%. A note says:

o3 high-compute costs not available as pricing and feature availability is still TBD. The amount of compute was roughly 172x the low-compute configuration.

So we can get a ballpark estimate here in that 172 * $6,677 = $1,148,444!
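That estimate is plain multiplication. A minimal sketch of the arithmetic, assuming cost scales linearly with compute (an assumption, since the note says pricing is still TBD); the function and constant names are illustrative only:

```python
# Figures quoted in the post; linear cost scaling is an assumption.
LOW_COMPUTE_COST_USD = 6_677   # reported cost of the "high efficiency" run
COMPUTE_MULTIPLIER = 172       # the other run used roughly 172x the compute
PUZZLES = 400                  # public ARC-AGI puzzles in the run


def estimate_high_compute_cost(low_cost: int, multiplier: int) -> int:
    """Scale the reported cost linearly by the compute multiplier."""
    return low_cost * multiplier


estimate = estimate_high_compute_cost(LOW_COMPUTE_COST_USD, COMPUTE_MULTIPLIER)
per_puzzle = estimate / PUZZLES

print(f"Estimated total: ${estimate:,}")
print(f"Estimated cost per puzzle: ${per_puzzle:,.2f}")
```

Under the same assumption, that works out to a little under $3,000 per puzzle, which is consistent with the "thousands of dollars" per task mentioned in the quoted article.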

Here’s how François explains the likely mechanisms behind o3, which reminds me of how a brute-force chess computer might work.

For now, we can only speculate about the exact specifics of how o3 works. But o3’s core mechanism appears to be natural language program search and execution within token space – at test time, the model searches over the space of possible Chains of Thought (CoTs) describing the steps required to solve the task, in a fashion perhaps not too dissimilar to AlphaZero-style Monte-Carlo tree search. In the case of o3, the search is presumably guided by some kind of evaluator model. To note, Demis Hassabis hinted back in a June 2023 interview that DeepMind had been researching this very idea – this line of work has been a long time coming.

So while single-generation LLMs struggle with novelty, o3 overcomes this by generating and executing its own programs, where the program itself (the CoT) becomes the artifact of knowledge recombination. Although this is not the only viable approach to test-time knowledge recombination (you could also do test-time training, or search in latent space), it represents the current state-of-the-art as per these new ARC-AGI numbers.

Effectively, o3 represents a form of deep learning-guided program search. The model does test-time search over a space of “programs” (in this case, natural language programs – the space of CoTs that describe the steps to solve the task at hand), guided by a deep learning prior (the base LLM). The reason why solving a single ARC-AGI task can end up taking up tens of millions of tokens and cost thousands of dollars is because this search process has to explore an enormous number of paths through program space – including backtracking.

I’m not sure if o3 (and o1 and similar models) even qualifies as an LLM any more - there’s clearly a whole lot more going on here than just next-token prediction.

On the question of whether o3 should qualify as AGI (whatever that might mean):

Passing ARC-AGI does not equate to achieving AGI, and, as a matter of fact, I don’t think o3 is AGI yet. o3 still fails on some very easy tasks, indicating fundamental differences with human intelligence.

Furthermore, early data points suggest that the upcoming ARC-AGI-2 benchmark will still pose a significant challenge to o3, potentially reducing its score to under 30% even at high compute (while a smart human would still be able to score over 95% with no training).

The post finishes with examples of the puzzles that o3 didn’t manage to solve, including this one which reassured me that I can still solve at least some puzzles that couldn’t be handled with thousands of dollars of GPU compute!

<p>Tags: <a href="https://simonwillison.net/tags/inference-scaling">inference-scaling</a>, <a href="https://simonwillison.net/tags/generative-ai">generative-ai</a>, <a href="https://simonwillison.net/tags/openai">openai</a>, <a href="https://simonwillison.net/tags/o3">o3</a>, <a href="https://simonwillison.net/tags/francois-chollet">francois-chollet</a>, <a href="https://simonwillison.net/tags/ai">ai</a>, <a href="https://simonwillison.net/tags/llms">llms</a></p> https://simonwillison.net/2024/Dec/20/openai-o3-breakthrough/#atom-everything

Why Disney Stopped Subscriptions on the App Store

date: 2024-12-20, from: Michael Tsai

Ariel Michaeli (October 2024): I see Disney’s choice of leaving the App Store as a long-term mistake that would cost them even more than the 30% they were giving Apple. Ariel Michaeli: Now that we have enough MRR data I think the reason is a bit clearer - and it isn’t just about fees. […] In November, […]

https://mjtsai.com/blog/2024/12/20/why-disney-stopped-subscriptions-on-the-app-store/

Provenance Rejected From the App Store

date: 2024-12-20, from: Michael Tsai

leazhito: Around 4 hours ago developer posted that the app was once again rejected by Apple for weird reasons regarding adding games during testing. They later posted that they submitted another appeal. And shortly after this (see image) thread of two tweets mentions they have seemingly ran out of money due to Apple’s decision making and that […]

https://mjtsai.com/blog/2024/12/20/provenance-rejected-from-the-app-store/

Apple Sued for Not Searching iCloud for CSAM

date: 2024-12-20, from: Michael Tsai

Ashley Belanger: Thousands of victims have sued Apple over its alleged failure to detect and report illegal child pornography, also known as child sex abuse materials (CSAM). The proposed class action comes after Apple scrapped a controversial CSAM-scanning tool last fall that was supposed to significantly reduce CSAM spreading in its products. Apple defended its decision […]

https://mjtsai.com/blog/2024/12/20/apple-sued-for-not-searching-icloud-for-csam/

Swift Concurrency in Real Apps

date: 2024-12-20, from: Michael Tsai

Bryan Jones: Consider this code, wherein we create a custom NSTableColumn that uses an image instead of a String as its header. Holly Borla posted a fix that special-cases NSObject.init(): Now, overriding NSObject.init() within a @MainActor-isolated type is difficult-to-impossible, especially if you need to call an initializer from an intermediate superclass that is also @MainActor-isolated. […]

https://mjtsai.com/blog/2024/12/20/swift-concurrency-in-real-apps/

Lilbits: Intel’s new (and upcoming) low-power chips, Lenovo’s new handhelds, and a wireless mouse dongle that’s also a tiny USB-C dock

date: 2024-12-20, from: Liliputing

A growing number of mini PC makers are starting to ship entry-level systems with cheap, low-power Intel N150 Twin Lake processors rather than the Intel N100 Alder Lake-N chips that have been popular for the past two years. On paper the new processor is basically what you get if you take an Intel N100 and […]

Quoting François Chollet

date: 2024-12-20, updated: 2024-12-20, from: Simon Willison’s Weblog

OpenAI’s new o3 system - trained on the ARC-AGI-1 Public Training set - has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.

This is a surprising and important step-function increase in AI capabilities, showing novel task adaptation ability never seen before in the GPT-family models. For context, ARC-AGI-1 took 4 years to go from 0% with GPT-3 in 2020 to 5% in 2024 with GPT-4o. All intuition about AI capabilities will need to get updated for o3.

— François Chollet, Co-founder, ARC Prize

<p>Tags: <a href="https://simonwillison.net/tags/o1">o1</a>, <a href="https://simonwillison.net/tags/generative-ai">generative-ai</a>, <a href="https://simonwillison.net/tags/inference-scaling">inference-scaling</a>, <a href="https://simonwillison.net/tags/francois-chollet">francois-chollet</a>, <a href="https://simonwillison.net/tags/ai">ai</a>, <a href="https://simonwillison.net/tags/llms">llms</a>, <a href="https://simonwillison.net/tags/openai">openai</a>, <a href="https://simonwillison.net/tags/o3">o3</a></p> https://simonwillison.net/2024/Dec/20/francois-chollet/#atom-everything

GMK EVO-X1 mini PC with Ryzen AI 9 HX 370 now available for pre-order for $920 and up

date: 2024-12-20, from: Liliputing

The GMK EVO-X1 HX 370 is a small desktop computer that features USB4, OcuLink, and 2.5 GbE LAN ports, 32GB of LPDDR5x-7500 onboard memory, and three M.2 slots for solid state storage. It’s also one of the first mini PCs to feature an AMD Ryzen AI 9 HX 370 “Strix Point” processor. First unveiled earlier this […]

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

NY Democrat: "Elon Musk has Donald Trump in a vise."

https://thehill.com/homenews/administration/5050368-musk-directing-trump-administration/

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

Kara Swisher Wants to Take The Washington Post Off Jeff Bezos’ Hands.

https://www.thedailybeast.com/kara-swisher-wants-to-take-the-washington-post-off-jeff-bezos-hands/

Demo: Expanso Cloud

date: 2024-12-20, from: Bacalhau Blog

We show off the power and ease of creating Bacalhau networks with a managed orchestrator at its heart

https://blog.bacalhau.org/p/demo-expanso-cloud

Daily Deals (12-20-2024)

date: 2024-12-20, from: Liliputing

The Steam Winter Sale kicked off this week and runs through January 2nd, with discounts on hundreds of PC games. You can also find some freebies. Meanwhile, rival game stores including GOG and Epic are running their own sales and Amazon Prime members can score a bunch of free games this month (or stream titles […]

https://liliputing.com/daily-deals-12-20-2024/

Live blog: the 12th day of OpenAI - “Early evals for OpenAI o3”

date: 2024-12-20, updated: 2024-12-20, from: Simon Willison’s Weblog

It’s the final day of OpenAI’s 12 Days of OpenAI launch series, and since I built a live blogging system a couple of months ago I’ve decided to roll it out again to provide live commentary during the half hour event, which kicks off at 10am San Francisco time.

Here’s the video on YouTube.

<p>Tags: <a href="https://simonwillison.net/tags/ai">ai</a>, <a href="https://simonwillison.net/tags/openai">openai</a>, <a href="https://simonwillison.net/tags/prompt-injection">prompt-injection</a>, <a href="https://simonwillison.net/tags/generative-ai">generative-ai</a>, <a href="https://simonwillison.net/tags/llms">llms</a>, <a href="https://simonwillison.net/tags/o1">o1</a>, <a href="https://simonwillison.net/tags/inference-scaling">inference-scaling</a>, <a href="https://simonwillison.net/tags/o3">o3</a></p> https://simonwillison.net/2024/Dec/20/live-blog-the-12th-day-of-openai/#atom-everything

Behind the Blog: Posting Through It

date: 2024-12-20, from: 404 Media Group

This is Behind the Blog, where we share our behind-the-scenes thoughts about how a few of our top stories of the week came together. This week, we discuss our top games of the year, air traffic control, and posting through it.

https://www.404media.co/behind-the-blog-posting-through-it/

Reviving old tech with new tech: A $0.03 RISC-V microcontroller brings an Acer N30 PDA back to life

date: 2024-12-20, from: Liliputing

The Acer N30 is a PDA released in 2004 that shipped with a 240 x 320 pixel resistive touchscreen display, a 266 MHz Samsung S3C2410 processor, and Windows Mobile 2003 software. It’s been out of production for nearly two decades, but could still be used as a mobile device for taking notes, playing games, and […]

@IIIF Mastodon feed (date: 2024-12-20, from: IIIF Mastodon feed)

Join us in the new year for "HDR Images via Image API."

We’ll welcome Christian Mahnke to demo a proof of concept for a #IIIF Image API endpoint with UltraHDR tiles & present a proposal to indicate technical rendering hints to Image API clients.

Zoom info 👉: iiif.io/community

https://glammr.us/@IIIF/113685726572804475

Not The Bear Shown

date: 2024-12-20, updated: 2024-12-20, from: One Foot Tsunami

https://onefoottsunami.com/2024/12/20/not-the-bear-shown/

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

You can favor AOC without making it about age. And know there are people listening who tune you out at the first sign of that uniquely Democratic Party hypocrisy. (Could have something to do with losing elections too, btw.)

https://www.slowboring.com/p/aoc-deserved-the-oversight-job?r=etla&triedRedirect=true

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

What would a government shutdown mean for you?

https://www.poynter.org/fact-checking/2024/why-is-the-government-shutting-down/

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

Staffers at The New York Times on the Books They Enjoyed in 2024.

https://www.nytimes.com/2024/12/20/books/review/staff-picks-books.html

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

After reading a few articles about Mike McCue’s announced Surf product I asked meta.ai to explain how it’s different from social web apps like Threads, Bluesky, and Twitter.

https://bsky.app/profile/scripting.com/post/3ldq6zu5oon23

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

The Ghosts in the Machine, by Liz Pelly.

https://harpers.org/archive/2025/01/the-ghosts-in-the-machine-liz-pelly-spotify-musicians/

December in LLMs has been a lot

date: 2024-12-20, updated: 2024-12-20, from: Simon Willison’s Weblog

I had big plans for December: for one thing, I was hoping to get to an actual RC of Datasette 1.0, in preparation for a full release in January. Instead, I’ve found myself distracted by a constant barrage of new LLM releases.

On December 4th Amazon introduced the Amazon Nova family of multi-modal models - clearly priced to compete with the excellent and inexpensive Gemini 1.5 series from Google. I got those working with LLM via a new llm-bedrock plugin.

The next big release was Llama 3.3 70B-Instruct, on December 6th. Meta claimed that this 70B model was comparable in quality to their much larger 405B model, and those claims seem to hold weight.

I wrote about how I can now run a GPT-4 class model on my laptop - the same laptop that was running a GPT-3 class model just 20 months ago.

Llama 3.3 70B has started showing up from API providers now, including super-fast hosted versions from both Groq (276 tokens/second) and Cerebras (a quite frankly absurd 2,200 tokens/second). If you haven’t tried Val Town’s Cerebras Coder demo you really should.

I think the huge gains in model efficiency are one of the defining stories of LLMs in 2024. It’s not just the local models that have benefited: the price of proprietary hosted LLMs has dropped through the floor, a result of both competition between vendors and the increasing efficiency of the models themselves.

Last year the running joke was that every time Google put out a new Gemini release OpenAI would ship something more impressive that same day to undermine them.

The tides have turned! This month Google shipped four updates that took the wind out of OpenAI’s sails.

The first was gemini-exp-1206 on December 6th, an experimental model that jumped straight to the top of some of the leaderboards. Was this our first glimpse of Gemini 2.0?

That was followed by Gemini 2.0 Flash on December 11th, the first official release in Google’s Gemini 2.0 series. The streaming support was particularly impressive, with https://aistudio.google.com/live demonstrating streaming audio and webcam communication with the multi-modal LLM a full day before OpenAI released their own streaming camera/audio features in an update to ChatGPT.

Then this morning Google shipped Gemini 2.0 Flash “Thinking mode”, their version of the inference scaling technique pioneered by OpenAI’s o1. I did not expect Gemini to ship a version of that before 2024 had even ended.

OpenAI have one day left in their 12 Days of OpenAI event. Previous highlights have included the full o1 model (an upgrade from o1-preview) and o1-pro, Sora (upstaged a week later by Google’s Veo 2), Canvas (with a confusing second way to run Python), Advanced Voice with video streaming and Santa and a very cool new WebRTC streaming API, ChatGPT Projects (pretty much a direct lift of the similar Claude feature) and the 1-800-CHATGPT phone line.

Tomorrow is the last day. I’m not going to try to predict what they’ll launch, but I imagine it will be something notable to close out the year.

Blog entries

- Gemini 2.0 Flash “Thinking mode”

- Building Python tools with a one-shot prompt using uv run and Claude Projects

- Gemini 2.0 Flash: An outstanding multi-modal LLM with a sci-fi streaming mode

- ChatGPT Canvas can make API requests now, but it’s complicated

- I can now run a GPT-4 class model on my laptop

- Prompts.js

- First impressions of the new Amazon Nova LLMs (via a new llm-bedrock plugin)

- Storing times for human events

- Ask questions of SQLite databases and CSV/JSON files in your terminal

Releases

- llm-gemini 0.8 - 2024-12-19: LLM plugin to access Google’s Gemini family of models

- datasette-enrichments-slow 0.1 - 2024-12-18: An enrichment on a slow loop to help debug progress bars

- llm-anthropic 0.11 - 2024-12-17: LLM access to models by Anthropic, including the Claude series

- llm-openrouter 0.3 - 2024-12-08: LLM plugin for models hosted by OpenRouter

- prompts-js 0.0.4 - 2024-12-08: async alternatives to browser alert() and prompt() and confirm()

- datasette-enrichments-llm 0.1a0 - 2024-12-05: Enrich data by prompting LLMs

- llm 0.19.1 - 2024-12-05: Access large language models from the command-line

- llm-bedrock 0.4 - 2024-12-04: Run prompts against models hosted on AWS Bedrock

- datasette-queries 0.1a0 - 2024-12-03: Save SQL queries in Datasette

- datasette-llm-usage 0.1a0 - 2024-12-02: Track usage of LLM tokens in a SQLite table

- llm-mistral 0.9 - 2024-12-02: LLM plugin providing access to Mistral models using the Mistral API

- llm-claude-3 0.10 - 2024-12-02: LLM plugin for interacting with the Claude 3 family of models

- datasette 0.65.1 - 2024-11-29: An open source multi-tool for exploring and publishing data

- sqlite-utils-ask 0.2 - 2024-11-24: Ask questions of your data with LLM assistance

- sqlite-utils 3.38 - 2024-11-23: Python CLI utility and library for manipulating SQLite databases

TILs

- Fixes for datetime UTC warnings in Python - 2024-12-12

- Publishing a simple client-side JavaScript package to npm with GitHub Actions - 2024-12-08

- GitHub OAuth for a static site using Cloudflare Workers - 2024-11-29

<p>Tags: <a href="https://simonwillison.net/tags/google">google</a>, <a href="https://simonwillison.net/tags/ai">ai</a>, <a href="https://simonwillison.net/tags/weeknotes">weeknotes</a>, <a href="https://simonwillison.net/tags/openai">openai</a>, <a href="https://simonwillison.net/tags/generative-ai">generative-ai</a>, <a href="https://simonwillison.net/tags/chatgpt">chatgpt</a>, <a href="https://simonwillison.net/tags/llms">llms</a>, <a href="https://simonwillison.net/tags/gemini">gemini</a>, <a href="https://simonwillison.net/tags/o1">o1</a>, <a href="https://simonwillison.net/tags/inference-scaling">inference-scaling</a></p> https://simonwillison.net/2024/Dec/20/december-in-llms-has-been-a-lot/#atom-everything

Building effective agents

date: 2024-12-20, updated: 2024-12-20, from: Simon Willison’s Weblog

My principal complaint about the term “agents” is that while it has many different potential definitions most of the people who use it seem to assume that everyone else shares and understands the definition that they have chosen to use.

This outstanding piece by Erik Schluntz and Barry Zhang at Anthropic bucks that trend from the start, providing a clear definition that they then use throughout.

They discuss “agentic systems” as a parent term, then define a distinction between “workflows” - systems where multiple LLMs are orchestrated together using pre-defined patterns - and “agents”, where the LLMs “dynamically direct their own processes and tool usage”. This second definition is later expanded with this delightfully clear description:

Agents begin their work with either a command from, or interactive discussion with, the human user. Once the task is clear, agents plan and operate independently, potentially returning to the human for further information or judgement. During execution, it’s crucial for the agents to gain “ground truth” from the environment at each step (such as tool call results or code execution) to assess its progress. Agents can then pause for human feedback at checkpoints or when encountering blockers. The task often terminates upon completion, but it’s also common to include stopping conditions (such as a maximum number of iterations) to maintain control.

That’s a definition I can live with!
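The loop in that definition (act, read ground truth from the environment, stop on completion or at an iteration cap) can be sketched in a few lines. This is a toy illustration rather than anything from the article: `run_agent`, `AgentRun`, and the counter "tool" in `demo` are hypothetical names, and a real agent would call an LLM where `choose_action` is.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class AgentRun:
    steps: list = field(default_factory=list)  # (action, observation) pairs
    done: bool = False
    stopped_reason: str = ""


def run_agent(
    task: Any,
    choose_action: Callable[[Any], Any],  # stand-in for the LLM's decision
    execute: Callable[[Any], Any],        # tool call returning ground truth
    is_complete: Callable[[Any], bool],
    max_iterations: int = 5,              # stopping condition to keep control
) -> AgentRun:
    run = AgentRun()
    observation = task
    for _ in range(max_iterations):
        action = choose_action(observation)
        observation = execute(action)     # feedback from the environment
        run.steps.append((action, observation))
        if is_complete(observation):
            run.done = True
            run.stopped_reason = "completed"
            return run
    run.stopped_reason = "max_iterations"
    return run


def demo(target: int, max_iterations: int = 5) -> AgentRun:
    """Toy environment: the only tool increments a counter."""
    counter = {"value": 0}

    def choose_action(observation: Any) -> str:
        return "increment"

    def execute(action: str) -> int:
        counter["value"] += 1
        return counter["value"]

    return run_agent(0, choose_action, execute,
                     lambda obs: obs >= target, max_iterations)


print(demo(3).stopped_reason)
print(demo(10).stopped_reason)
```

The first call finishes because the task completes; the second is cut off by the iteration cap, which is the kind of stopping condition the quoted passage describes.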

They also introduce a term that I really like: the augmented LLM. This is an LLM with augmentations such as tools - I’ve seen people use the term “agents” just for this, which never felt right to me.

The rest of the article is the clearest practical guide to building systems that combine multiple LLM calls that I’ve seen anywhere.

Most of the focus is actually on workflows. They describe five different patterns for workflows in detail:

- Prompt chaining, e.g. generating a document and then translating it to a separate language as a second LLM call

- Routing, where an initial LLM call decides which model or call should be used next (sending easy tasks to Haiku and harder tasks to Sonnet, for example)

- Parallelization, where a task is broken up and run in parallel (e.g. image-to-text on multiple document pages at once) or processed by some kind of voting mechanism

- Orchestrator-workers, where an orchestrator triggers multiple LLM calls that are then synthesized together, for example running searches against multiple sources and combining the results

- Evaluator-optimizer, where one model checks the work of another in a loop

These patterns all make sense to me, and giving them clear names makes them easier to reason about.
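To make the first two patterns concrete, here is a minimal sketch of prompt chaining and routing. Everything in it is an illustrative assumption: `fake_llm` just echoes its input in place of a real API call, the model names are placeholders, and `classify` uses a keyword check where a real router would make an LLM call.

```python
from typing import Callable

LLM = Callable[[str, str], str]


def fake_llm(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real LLM API call: echoes its input."""
    return f"[{model}] {prompt}"


def prompt_chain(topic: str, llm: LLM = fake_llm) -> str:
    """Prompt chaining: the output of the first call feeds the second."""
    draft = llm("small-model", f"Write a document about {topic}")
    return llm("small-model", f"Translate to French: {draft}")


def classify(task: str) -> str:
    """Stand-in for the routing call; a real router would ask a model."""
    return "hard" if "prove" in task.lower() else "easy"


def route(task: str, llm: LLM = fake_llm) -> str:
    """Routing: choose which model handles the task, then call it."""
    model = {"easy": "small-model", "hard": "large-model"}[classify(task)]
    return llm(model, task)


print(prompt_chain("cats"))
print(route("Say hello"))
print(route("Prove this theorem"))
```

Passing the LLM in as a plain function keeps each pattern testable without network access, in line with the article's advice to start with direct calls and simple code.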

When should you upgrade from basic prompting to workflows and then to full agents? The authors provide this sensible warning:

When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed. This might mean not building agentic systems at all.

But assuming you do need to go beyond what can be achieved even with the aforementioned workflow patterns, their model for agents may be a useful fit:

Agents can be used for open-ended problems where it’s difficult or impossible to predict the required number of steps, and where you can’t hardcode a fixed path. The LLM will potentially operate for many turns, and you must have some level of trust in its decision-making. Agents’ autonomy makes them ideal for scaling tasks in trusted environments.

The autonomous nature of agents means higher costs, and the potential for compounding errors. We recommend extensive testing in sandboxed environments, along with the appropriate guardrails.

They also warn against investing in complex agent frameworks before you’ve exhausted your options using direct API access and simple code.

The article is accompanied by a brand new set of cookbook recipes illustrating all five of the workflow patterns. The Evaluator-Optimizer Workflow example is particularly fun, setting up a code-generating prompt and a code-reviewing evaluator prompt and having them loop until the evaluator is happy with the result.
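The shape of that generate-and-review loop can be sketched as follows. This is not the cookbook's code: `evaluator_optimizer`, `toy_generate`, and `toy_evaluate` are hypothetical, and the two toy functions stand in for the generator and evaluator prompts so the loop runs without an API.

```python
from typing import Callable, Tuple


def evaluator_optimizer(
    task: str,
    generate: Callable[[str, str], str],              # (task, feedback) -> attempt
    evaluate: Callable[[str, str], Tuple[str, str]],  # -> (verdict, feedback)
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Loop until the evaluator passes the attempt or rounds run out."""
    feedback = ""
    attempt = ""
    for round_number in range(1, max_rounds + 1):
        attempt = generate(task, feedback)
        verdict, feedback = evaluate(task, attempt)
        if verdict == "PASS":
            return attempt, round_number
    return attempt, max_rounds


def toy_generate(task: str, feedback: str) -> str:
    """Stand-in for the code-generating prompt: fixes the bug once told."""
    if feedback:
        return "def add(a, b): return a + b"
    return "def add(a, b): return a - b"


def toy_evaluate(task: str, attempt: str) -> Tuple[str, str]:
    """Stand-in for the reviewing prompt: a simple string check."""
    if "a + b" in attempt:
        return "PASS", ""
    return "NEEDS_IMPROVEMENT", "add() should add, not subtract"


result, rounds = evaluator_optimizer("Write add(a, b)", toy_generate, toy_evaluate)
print(result, rounds)
```

The `max_rounds` cap matters for the same reason as in agent loops: without it, a generator and evaluator that never agree would run indefinitely.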

<p><small>Via <a href="https://x.com/HamelHusain/status/1869935867940540596">Hamel Husain</a></small></p>

<p>Tags: <a href="https://simonwillison.net/tags/prompt-engineering">prompt-engineering</a>, <a href="https://simonwillison.net/tags/anthropic">anthropic</a>, <a href="https://simonwillison.net/tags/generative-ai">generative-ai</a>, <a href="https://simonwillison.net/tags/llm-tool-use">llm-tool-use</a>, <a href="https://simonwillison.net/tags/ai">ai</a>, <a href="https://simonwillison.net/tags/llms">llms</a>, <a href="https://simonwillison.net/tags/ai-agents">ai-agents</a></p> https://simonwillison.net/2024/Dec/20/building-effective-agents/#atom-everything

Quoting Marcus Hutchins

date: 2024-12-20, updated: 2024-12-20, from: Simon Willison’s Weblog

50% of cybersecurity is endlessly explaining that consumer VPNs don’t address any real cybersecurity issues. They are basically only useful for bypassing geofences and making money telling people they need to buy a VPN.

Man-in-the-middle attacks on Public WiFi networks haven’t been a realistic threat in a decade. Almost all websites use encryption by default, and anything of value uses HSTS to prevent attackers from downgrading / disabling encryption. It’s a non issue.
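For context on the HSTS mention: a site opts in by sending a `Strict-Transport-Security` response header, which tells the browser to use HTTPS only for that host for `max-age` seconds. A minimal sketch of reading such a header; `parse_hsts` is a hypothetical helper rather than a library function, and `preload` is a widely used token that is not defined in RFC 6797 itself.

```python
def parse_hsts(header: str) -> dict:
    """Parse a Strict-Transport-Security header value into a small dict.

    Directive names are matched case-insensitively; unknown directives
    are ignored. This sketch does not validate that max-age is present.
    """
    policy = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()
        if name == "max-age":
            policy["max_age"] = int(value.strip().strip('"'))
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
        elif name == "preload":
            policy["preload"] = True
    return policy


print(parse_hsts("max-age=31536000; includeSubDomains; preload"))
```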

<p>Tags: <a href="https://simonwillison.net/tags/encryption">encryption</a>, <a href="https://simonwillison.net/tags/vpn">vpn</a>, <a href="https://simonwillison.net/tags/https">https</a>, <a href="https://simonwillison.net/tags/security">security</a></p> https://simonwillison.net/2024/Dec/20/marcus-hutchins/#atom-everything

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

Nate Bargatze: The baffling rise of the most inoffensive comedian alive.

Understanding Computer Networks by Analogy

date: 2024-12-20, from: Memo Garcia blog

I’m writing this for the version of me back in university who struggled to grasp networking concepts. This isn’t a full map of the networking world, but it’s a starting point. If you’re also finding it tricky to understand some of the ideas that make the internet work, I hope this helps.

I’m sticking with analogies here instead of going deep into technical terms—you can find those easily anywhere. I just enjoy looking at the world from different perspectives. It’s fascinating how many connections you can spot when you approach things from a new angle.

https://memo.mx/posts/understanding-computer-networks-by-analogy/

@Dave Winer’s linkblog (date: 2024-12-20, from: Dave Winer’s linkblog)

The GOP Is Treating Musk Like He’s in Charge.

Go Developer Survey 2024 H2 Results

date: 2024-12-20, updated: 2024-12-20, from: Go language blog

What we learned from our 2024 H2 developer survey